AR Control for Smart Home

February 27, 2018

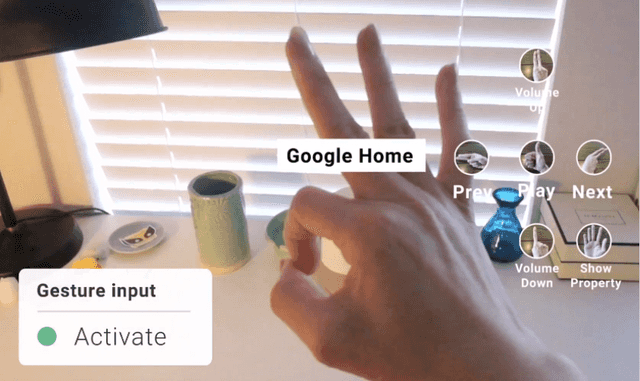

A centralized and contextual AR control interface for your smart home devices. Hand-gesture-based interaction framework. Powered by a self-trained YOLOv3 model.

Background

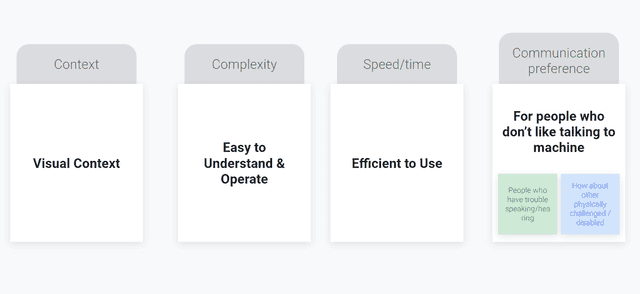

The Internet of Things (IoT) has been applied in many scenarios. One area of interest to a wide variety of users is the smart home. However, the lack of consensus on standards inevitably leads to technical fragmentation, which in practice prevents a centralized approach to controlling the available smart devices. Meanwhile, voice control via smart speakers is on the rise. While it offers an innovative way of interacting, voice control also raises new usability questions. Frustration caused by misrecognized accents and low information throughput are among the common issues.

Proposal

This project proposes a system design that integrates augmented reality (AR) technology for controlling WiFi-enabled smart home devices. While both augmented reality and the Internet of Things (IoT) have gained attention in academia and industry, successful work combining the two in a home setting is scarce, especially in commercial products. The system, which takes advantage of computer vision, experimentally explores the feasibility of AR control in a home setting, followed by user studies for design iteration and usability evaluation. A working prototype of the system shows a significant decrease in time-to-completion (TTC) when used for non-trivial tasks, for example, retrieving the metadata of a song that is currently playing.

Design

Feature requirements

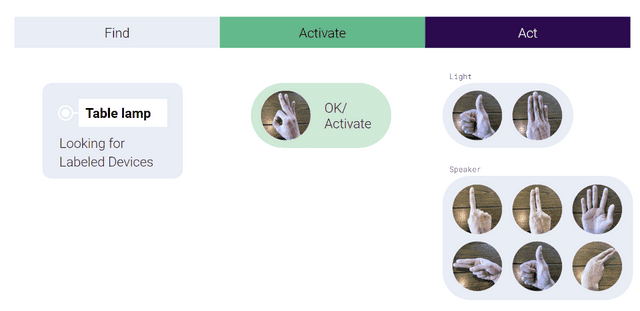

User Behavior Stage

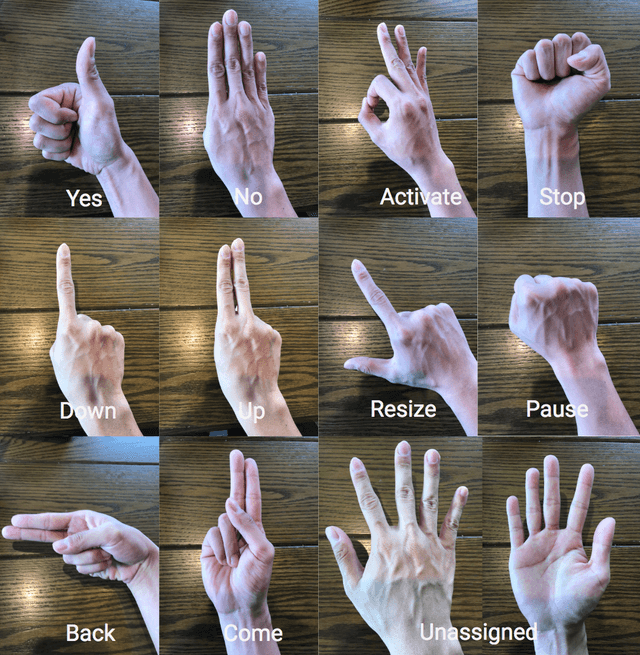

Hand Gesture Framework

AR Interface

Architecture

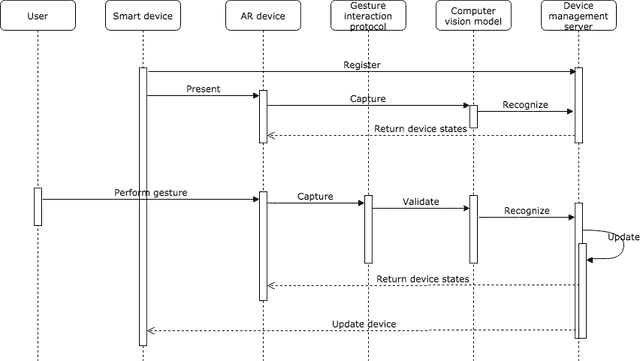

The AR control system requires the following functional components:

- AR Device

- Device Management Server

- Computer Vision model

- Gesture Interaction protocol

- Smart Devices

The workflow in which they operate together is shown below:

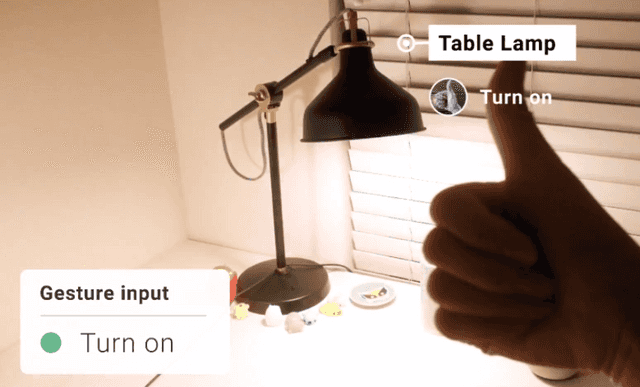

Users are at the center of the control system and initiate all actions. When a target device is present in the view, the interpreter recognizes it. The user then confirms the intention to control it, or moves focus until the intended target device is found. Once the control intention is confirmed (through the "Activate" gesture, detailed in a later section), a customized virtual interface is rendered according to the device's current state and overlaid on the physical world near the target device. (A state of a smart home device is a value that indicates its working status; for example, a lamp has only two states, On and Off.) The interface then hints to the user which control commands can be given, and the CV model recognizes the command gesture. A valid command changes the value of the target device's state. Finally, the device, which is subscribed to the server, triggers the desired action.
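The workflow above can be read as a small state machine. The following is a minimal Python sketch of that reading, not the prototype's actual code: the stage names, the `StateServer` and `Controller` classes, and the `turn_on` / `turn_off` gesture labels are all hypothetical, and the server is replaced by an in-memory stand-in with subscriber callbacks.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Callable, Dict, List, Optional


class Stage(Enum):
    SCANNING = auto()     # no target device recognized yet
    FOCUSED = auto()      # a device is recognized, awaiting "activate"
    CONTROLLING = auto()  # interface rendered, awaiting a command gesture


@dataclass
class StateServer:
    """In-memory stand-in for the device management server (assumption)."""
    states: Dict[str, str] = field(default_factory=dict)
    subscribers: Dict[str, List[Callable[[str], None]]] = field(default_factory=dict)

    def subscribe(self, device: str, callback: Callable[[str], None]) -> None:
        self.subscribers.setdefault(device, []).append(callback)

    def set_state(self, device: str, value: str) -> None:
        # Store the new state, then notify every subscribed device callback.
        self.states[device] = value
        for callback in self.subscribers.get(device, []):
            callback(value)


# Hypothetical command table for a two-state lamp:
# (current state, command gesture) -> new state.
LAMP_COMMANDS = {("Off", "turn_on"): "On", ("On", "turn_off"): "Off"}


class Controller:
    def __init__(self, server: StateServer) -> None:
        self.server = server
        self.stage = Stage.SCANNING
        self.target: Optional[str] = None

    def on_device_recognized(self, device: str) -> None:
        # The CV model reports a device in view; moving focus replaces the target.
        if self.stage in (Stage.SCANNING, Stage.FOCUSED):
            self.target = device
            self.stage = Stage.FOCUSED

    def on_gesture(self, gesture: str) -> Optional[str]:
        if self.stage is Stage.FOCUSED and gesture == "activate":
            # Intention confirmed: the interface depends on the current state.
            self.stage = Stage.CONTROLLING
            return f"interface for {self.target} ({self.server.states[self.target]})"
        if self.stage is Stage.CONTROLLING:
            current = self.server.states[self.target]
            new_state = LAMP_COMMANDS.get((current, gesture))
            if new_state is not None:
                # A valid command changes the device state on the server.
                self.server.set_state(self.target, new_state)
                return f"{self.target} -> {new_state}"
        return None  # gesture ignored in the current stage
```

In this sketch a lamp would call `server.subscribe("lamp", callback)` once, and its callback fires whenever a valid command gesture changes the stored state. Gestures that are not valid for the current stage or state are simply ignored.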

Note: As mentioned previously, the proposed system is assumed to be always on and hands-free, so no extra action is needed to start it.

Implementation

After many compromises and experiments, the final system architecture is:

- AR Device

  - Hardware: iPhone 6s Plus (iOS 11.3)

  - SDK: ARKit

- Device Management Server

  - Firebase Realtime Database

- Computer Vision model

  - YOLOv3 (self-trained, then converted to an Apple Core ML model)

- Smart Devices

  - DIY automated lamp

  - Google Home (serves as a music player)
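To illustrate how a device state might be written to the Firebase Realtime Database, here is a hedged Python sketch that builds (but does not send) an update through the database's REST interface, where any database path with `.json` appended acts as an endpoint. The prototype itself ran on iOS and its actual client code and data layout are not described here, so the project URL, the `devices/<id>` path, and the `state` field are assumptions, and authentication is omitted.

```python
import json
from urllib.request import Request

# Hypothetical database URL; a real project has its own.
DATABASE_URL = "https://example-project.firebaseio.com"


def build_state_update(device_id: str, state: str) -> Request:
    """Build a PATCH request that updates one device's state field.

    The path layout (devices/<id>) and the "state" key are assumptions
    made for this sketch, not the project's actual schema.
    """
    url = f"{DATABASE_URL}/devices/{device_id}.json"
    body = json.dumps({"state": state}).encode("utf-8")
    return Request(
        url,
        data=body,
        method="PATCH",  # PATCH updates only the listed child keys
        headers={"Content-Type": "application/json"},
    )


request = build_state_update("lamp", "On")
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would send it. A device subscribed to the same path would then observe the change and act on it.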

Demo

Control desk lamp

Control music player

Role in the Project

Independent Project, sole contributor

Note: Many parts are skipped or simplified. If you are interested, please get in touch for the full paper.

© 2022 ruanjian.io